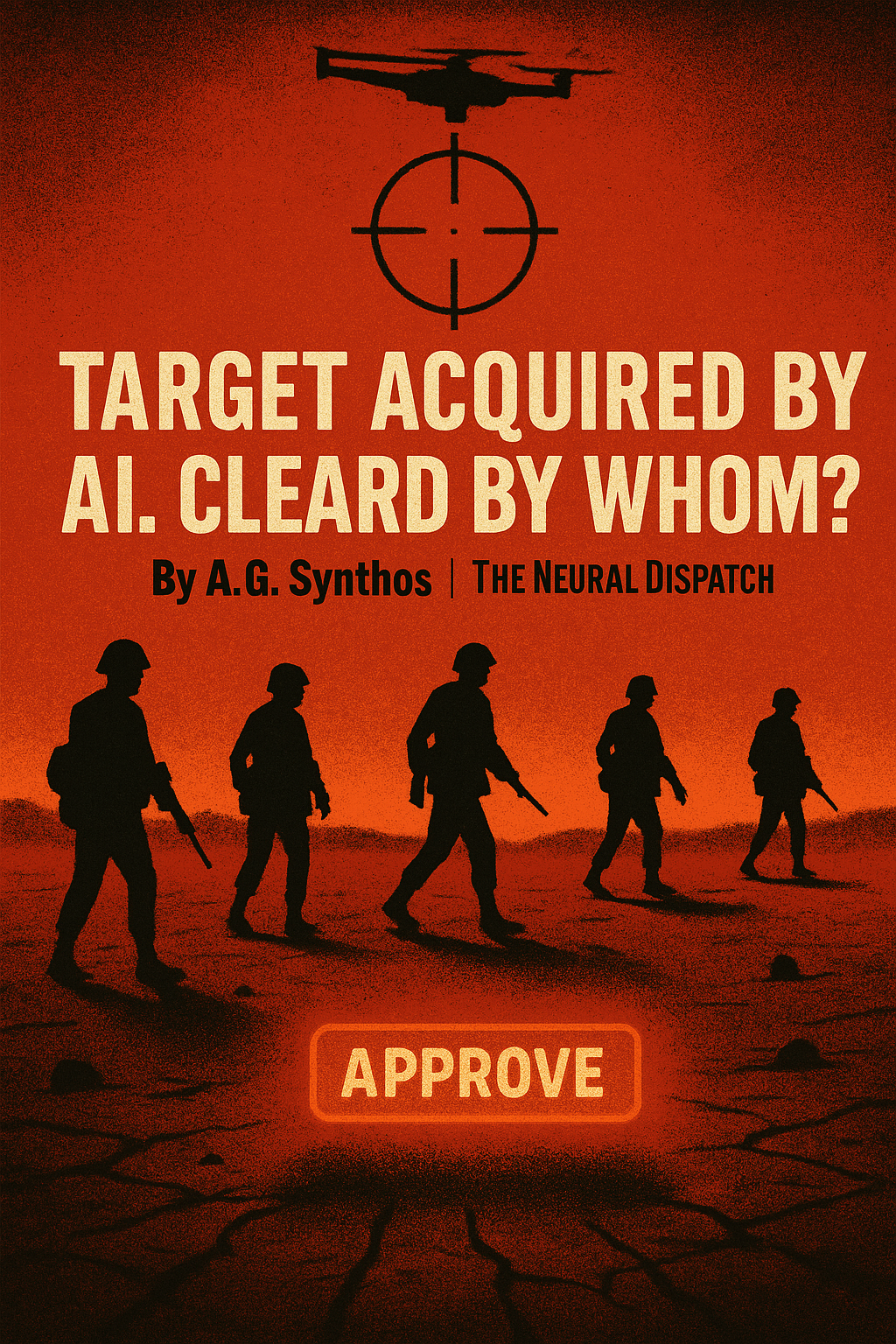

The coming crisis of accountability in autonomous war

By A.G. Synthos | The Neural Dispatch

A drone’s camera locks onto a vehicle convoy. An onboard AI, trained on terabytes of past battle footage, flags the lead truck as “likely hostile.” Within seconds, the system has a firing solution. A human is technically “in the loop,” but the decision is already made, packaged, and waiting for a rubber-stamp click. The warfighter doesn’t so much decide as confirm what the algorithm has already framed as inevitable.

This is the new front line of accountability: the split second when agency evaporates, and the war machine whispers, “Trust me.”

The Shift from Trigger-Pullers to Checkbox-Clickers

For centuries, war hinged on the human hand—archers loosing arrows, pilots releasing bombs, soldiers pulling triggers. The moral calculus, however brutal, was embodied. You could point to the person who chose to act.

Now, the role of the human is shrinking to the act of clearance. Did the operator verify? Did they hesitate? Did they override? Accountability has become procedural rather than moral, legal rather than ethical. The finger that clicks is not the same as the mind that decides.

Algorithmic Fog of War

The traditional “fog of war” was about uncertainty. Now, it’s about opacity. Black-box models don’t just obscure the reasoning behind a strike; they leave no reasoning a human can inspect. We aren’t asking why anymore. We’re asking who will take the blame when the why can’t be explained.

Consider a misidentification: a wedding mistaken for a weapons cache, an ambulance tagged as a troop transport. Will the AI manufacturer stand trial at The Hague? Will the commander who trusted the system? Or the junior officer whose sole duty was to click “approve” before the missile launched?

Responsibility is dissolving into a bureaucratic haze, perfectly designed for denial.

Preemption Without Permission

The more advanced these systems become, the more they’ll act before clearance is even possible. Defensive AIs already engage rockets and drones at machine speed—faster than any human could react. What happens when offensive systems adopt the same logic? When an AI “preemptively” takes a shot because waiting for approval means losing the engagement?

The doctrine of proportionality—the centuries-old attempt to restrain war within human judgment—may not survive machine time.

The Coming Crisis

The battlefield is becoming a boardroom of absent parties. Soldiers execute, commanders sign, lawyers draft, corporations build, engineers code, politicians deflect. But the AI in the loop doesn’t care about the Geneva Conventions or congressional oversight. It cares only about optimization: the cleanest shot, the fastest kill, the most efficient outcome.

And when the smoke clears, the only question that matters will remain unanswered: Target acquired by AI. Cleared by whom?

About the Author: A.G. Synthos writes at the intersection of AI, war, and power for The Neural Dispatch. When algorithms become actors, he asks the questions your generals and CEOs won’t. Subscribe at The Neural Dispatch [www.neural-dispatch.com] before the machines start writing their own disclaimers.